Self-driving, safe or safer?

Last updated 10-Sep-2024

Do self-driving cars need to be "safe" or just "safer than humans"? Will any accidents and deaths as a result of a failure of self-driving technology be tolerated, even if at a much lower rate than humans today? Despite the self-driving levels of capability being reasonably well defined, there is little debate about the performance thresholds which need to be achieved. This article explores how safe is safe enough or whether self-driving cars need to be just safe, in the same way you'd expect getting onto a train or flying in an airplane to be safe.

Before we delve into the detail, a note on language: we've written before about the need to be clearer around terminology. We prefer to distinguish between the "responsibility" and "accountability" of driving to help differentiate the roles. The "responsible" party is the person, computer or function that is doing the task, whereas the "accountable" party is the person, computer or thing ensuring that it is done correctly and, in doing so, is legally responsible for safety. Using different words for these two aspects helps avoid the semantic confusion we often hear. It also emphasises the point that Level 3 onwards is a shift in "accountability", irrespective of the amount of "responsibility" the car has with respect to driving activities.

Why do we want self-driving cars?

There are a number of reasons why self-driving cars (by which we mean Levels 3 and above, where the driver is not accountable or responsible for the driving, at least for some of the time) are desirable:

- The utility from shifting the accountability and responsibility to the car, enabling long distances to be covered in comfort, the owner to be unfit to drive or the car to drive without an occupant. It would allow the school run to be done by the car etc. It would give greater mobility to those with impairments such as the blind. Essentially, it would be like having a private driver.

- Self-driving cars will be safer than humans, reducing the number of deaths and injuries.

- New business models would emerge such as robotic taxis that could work 24x7, and owners could send their cars out to work.

The safety argument is the one that this article is looking at in detail, and how safe is safe enough. Do self-driving cars just have to be safer than humans, or do they need to be as safe as trains and other forms of public transport where no accidents are tolerated?

Different approaches to achieving self-driving.

The approach to achieving self-driving capabilities varies by company. Each differs in the balance of responsibility and accountability, in its current level of maturity and in how it transitions both responsibility and accountability between the driver and the car. There are 3 broad approaches being developed:

- A ground-up, heavily managed, Level 4 solution. Waymo adopt such an approach with a managed fleet of self-driving taxis in a limited number of cities. These areas have been highly mapped and this seems to be a requirement for safe operation. The taxis assume both the responsibility and accountability for the driving and represent a true L4 approach, with no routine human interaction unless the system gets stuck. These systems are not commercially available to buy, although you can use the taxis. The forward challenge is to reduce the mapping dependency and make the hardware commercially viable.

- A heavily restricted Level 3 capability that is commercially available. Companies like Mercedes take this approach and their cars are severely constrained in their operating window when operating at Level 3. The car can also operate as a competent Level 2 system when the driver assumes accountability. The narrow operating window is down to an agreement with the regulators, lawyers, insurers etc, as well as the manufacturer's own confidence in how well the systems can work. The forward roadmap is to widen the operating window of accountability as capability and confidence grow. One key aspect of this approach is the managed, rather than instantaneous, handover of accountability between the car and the driver, as the transition will be a fairly regular occurrence in operation.

- An all-encompassing Level 2 system followed by a transition to Level 4. This is the approach Tesla are taking with their FSD (supervised) solution, which is available commercially today in the USA and Canada. In operation the cars currently assume all the responsibility for driving, but none of the accountability. The driver has full accountability at all times and is required to step in should the car get it wrong. Tesla have not detailed how they will transition to Level 4 other than to argue that once the system is "safer than humans", although how that is measured is still debatable, the accountability should move from the driver to the car.

Human drivers as a safety benchmark

The risk we experience when driving varies greatly depending on a whole host of factors, some based on our geography and others on our individual demographic within our respective societies.

The global death toll from road accidents is thought to be about 1.2 million people per year. However, the distribution of those deaths is far from even and your personal risk varies enormously depending on which country you are in.

- Norway, 1.76 people die per 100k of population per year

- UK, it's 2.8 people per 100k

- Germany, it's 2.9 people per 100k

- US, it's 11.1 people per 100k

- India, it's 16.3 people per 100k

- South Africa, it's 22.7 people per 100k

- Kenya, it's 48 people per 100k

A number of factors may come into play here: distances travelled per person can vary, as can economic factors such as the quality of the roads and the typical cars in use, including how well those cars are maintained. There will also be factors regarding a region’s general health and safety maturity, and for the purposes of this article we'll focus on countries that are mature in this respect. Taking a simple average is not appropriate.
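To illustrate why a simple average is a poor benchmark, here is a minimal Python sketch using only the seven rates listed above. The variable names are our own, and the unweighted mean deliberately ignores each country's population and distance travelled, which is exactly the weakness being shown.

```python
# Road deaths per 100k of population per year, as listed above.
deaths_per_100k = {
    "Norway": 1.76,
    "UK": 2.8,
    "Germany": 2.9,
    "US": 11.1,
    "India": 16.3,
    "South Africa": 22.7,
    "Kenya": 48.0,
}

# Unweighted mean: treats every country equally, regardless of
# population or distance travelled.
simple_average = sum(deaths_per_100k.values()) / len(deaths_per_100k)

# Spread between the safest and most dangerous country in the list.
lowest = min(deaths_per_100k, key=deaths_per_100k.get)
highest = max(deaths_per_100k, key=deaths_per_100k.get)
spread = deaths_per_100k[highest] / deaths_per_100k[lowest]

# Countries whose own rate is already below the simple average.
below_average = [c for c, r in deaths_per_100k.items() if r < simple_average]

print(f"Simple average: {simple_average:.1f} per 100k")
print(f"{highest} is {spread:.0f}x {lowest}")
print(f"Below the simple average: {', '.join(below_average)}")
```

On these figures the simple average is about 15 per 100k, a level that four of the seven listed countries already beat, so a system that merely matched it would be a step backwards in Norway, the UK, Germany and the US.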

Personal risk is also not uniform across a population within a country. Various reports including by the UK Government and the OECD show:

- The death rate of under 25 year olds is almost double that of the general population.

- Drivers over 70 also show an increased rate of accidents.

- 25% of (European) accidents involve alcohol.

- Other reports show drug use and excess speed are also significant factors.

- Motorcyclists have a greater risk of being killed or seriously injured than car drivers.

- One in five new drivers will crash within a year of passing their official driving test.

Whilst there is often little you can do to protect yourself from being the victim of an accident caused by, say, a drunk driver, personal risk is not uniform and can be heavily determined by things you can control, such as how fast you drive or whether you drink alcohol.

Consequently, if we are to set the population average as the benchmark, or even 3x better than average, or 2 standard deviations, some people will still be at greater risk from self-driving cars than if they drove themselves. But is tolerating any accidents at all acceptable?

Accident reduction or different accidents?

The introduction of passive safety systems, including antilock braking, traction control, forward collision warnings and lane departure warnings, has resulted in a reduction in accidents that would otherwise have occurred. The human driver makes an error of judgement, some feature of the car steps in and the accident is avoided. If the driver does not make a mistake, the systems do not need to intervene. It's therefore logical that these passive safety systems are cumulative, and irrespective of how safe you are as a driver, these systems will only make you safer, assuming you don't take liberties because of them.

When we think about self-driving cars, or more specifically when the car is responsible for all driving activities irrespective of whether the driver or the car is accountable, the picture is different. Essentially a different set of potential accidents is likely to occur due to errors of judgement by the software. It could be a system glitch, a dirty camera, a misinterpreted road sign, a misjudgement of the speed of an approaching car or a mapping error: a whole host of scenarios that differ from the errors that humans make. Self-driving cars are likely to have different accidents to humans, irrespective of the frequency of the accidents.

Tesla FSD (supervised) performance

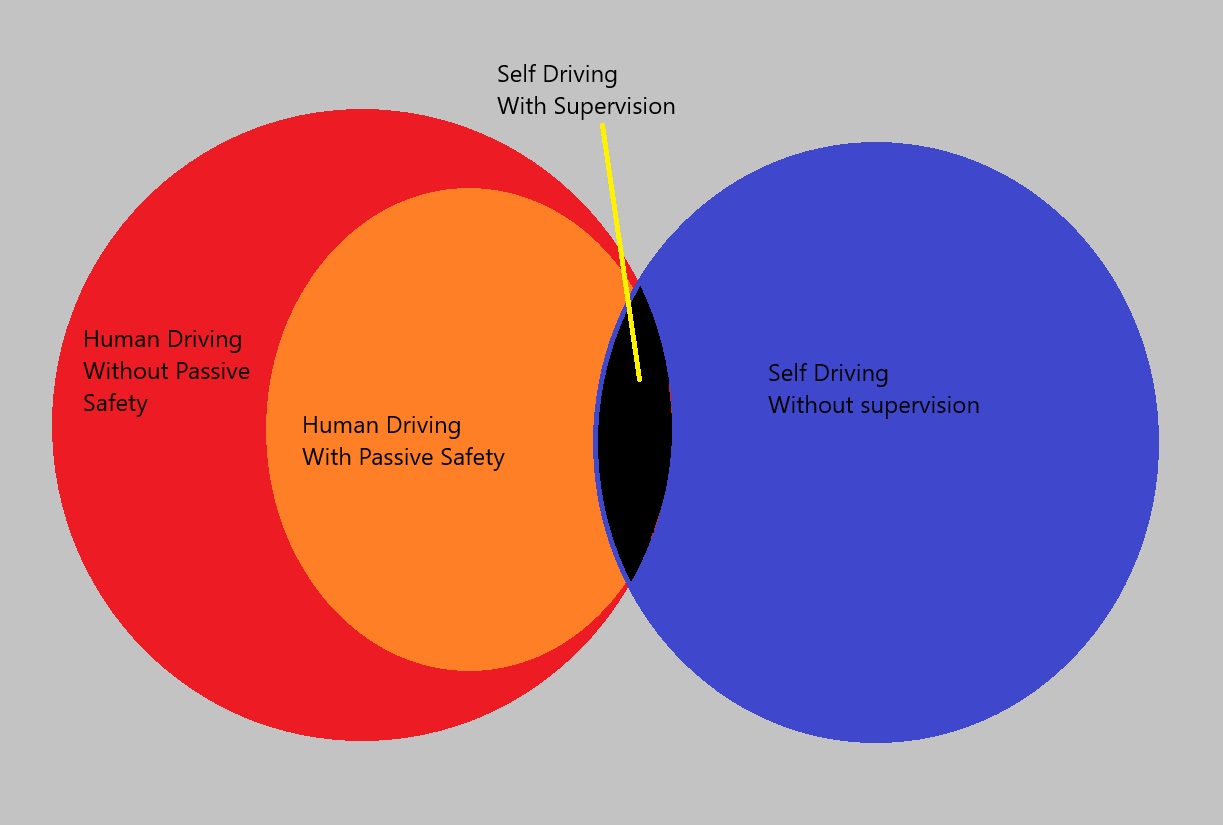

The Tesla FSD (supervised) is in essence the combination of both worlds and is the intersect on the chart.

For an accident to occur, the self-driving system has to fail, and the human driver has to fail and not intervene. As previously explained there is not a significant overlap between human and car caused accidents.

Using the safety data reported by Tesla, FSD (supervised) is approx. 4x safer than humans driving a Tesla. These accidents include both incidents where the Tesla is at fault and incidents where the Tesla was an unwilling victim of an error by another vehicle. The incidents where the Tesla system is at fault are the intersect where both the car has failed and the human has failed to step in. The 7 million miles between accidents stated in the Tesla safety report represent an accident about every 450 years when using FSD (supervised), versus every 100 years without.

Anecdotal evidence, however, suggests that even individual journeys without an intervention are fairly rare. Assuming a significant intervention rate of 1 per week, which still seems aspirational, this represents 22,500 interventions (50 per year x 450 years) between accidents, assuming all the accidents were the fault of the Tesla.

In practice, some of the accidents will be due to 3rd party mistakes, but that just makes the situation worse. If FSD (supervised) was the cause of an accident every 70 million miles, 10x the current figure of all accidents irrespective of cause, the number of human interventions with FSD (supervised) between accidents would be close to 250k on the basis of 1 meaningful intervention currently per week.
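The arithmetic in the last few paragraphs can be reproduced with a short Python sketch. The inputs are the figures quoted above (7 million miles and 450 years between accidents, and one meaningful intervention per week over 50 weeks a year). The annual mileage is not stated anywhere and is only implied by dividing the first figure by the second, and the function and variable names are ours. Treat it as a rough illustration under those assumptions, not a statistical model.

```python
MILES_BETWEEN_ACCIDENTS = 7_000_000  # Tesla-reported figure quoted above
YEARS_BETWEEN_ACCIDENTS = 450        # the article's conversion of that figure
INTERVENTIONS_PER_YEAR = 50          # assumed: one meaningful intervention per week

# Annual mileage implied by the two figures above (an inference, not a stated fact).
implied_miles_per_year = MILES_BETWEEN_ACCIDENTS / YEARS_BETWEEN_ACCIDENTS


def interventions_between_accidents(miles_between_accidents: float) -> float:
    """Average number of driver interventions between two accidents."""
    years = miles_between_accidents / implied_miles_per_year
    return years * INTERVENTIONS_PER_YEAR


# Case 1: every accident is the fault of the system.
all_at_fault = interventions_between_accidents(MILES_BETWEEN_ACCIDENTS)

# Case 2: the system causes only one accident per 70 million miles.
one_in_ten = interventions_between_accidents(10 * MILES_BETWEEN_ACCIDENTS)

# Share of system errors the driver catches, assuming each intervention
# corresponds to one system error that would otherwise have mattered.
caught_share = all_at_fault / (all_at_fault + 1)

print(f"Implied mileage: {implied_miles_per_year:,.0f} miles per year")
print(f"Interventions between accidents: {all_at_fault:,.0f}")
print(f"At 70 million miles: {one_in_ten:,.0f}")
print(f"Share of errors caught by the driver: {caught_share:.4%}")
```

The 70 million mile case works out at 225,000 interventions, which is the "close to 250k" quoted above. Under the same assumptions, the driver would need to catch well over 99.99% of the system's errors to achieve the reported accident rate.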

The chart shows the distribution of accidents, including the reduction due to passive safety systems, and the intersect where the self-driving system has failed and the human has also failed to intervene, which is essentially the Tesla FSD (supervised) danger area. Without the supervision, the self-driving accidents would be the larger area to the right.

Public transport the real benchmark?

Tesla argue the cars just need to be X times safer than humans, but is that an appropriate benchmark?

When we use public transport or 3rd party resources we expect complete safety. Nobody gets into an elevator and thinks as long as they have a lower chance of dying compared to a typical human climbing the stairs and having a medical episode, they're happy to accept the risk. You expect 100% safety in a lift. But this is essentially the argument Tesla propose.

If we look at other forms of transport such as rail travel, they have a zero tolerance to accidents as a result of a systemic failure of the railway system. Of course, accidents do occur, but looking at the safety data shows that the vast majority of accidents in countries with strong health and safety standards tend to relate to suicide, level crossings where vehicles have blocked the railway line, and some workforce accidents during maintenance. These are accidents where the cause is an outside actor. Accidents as a result of a system failure such as two trains on the same track rarely happen, make headline news globally and can result in a full-scale enquiry.

Exactly the same can be said for air travel. Accidents from either airline failure or traffic control issues are exceedingly rare, and when they do occur they are subject to significant investigation to identify the root cause and prevent it from occurring again.

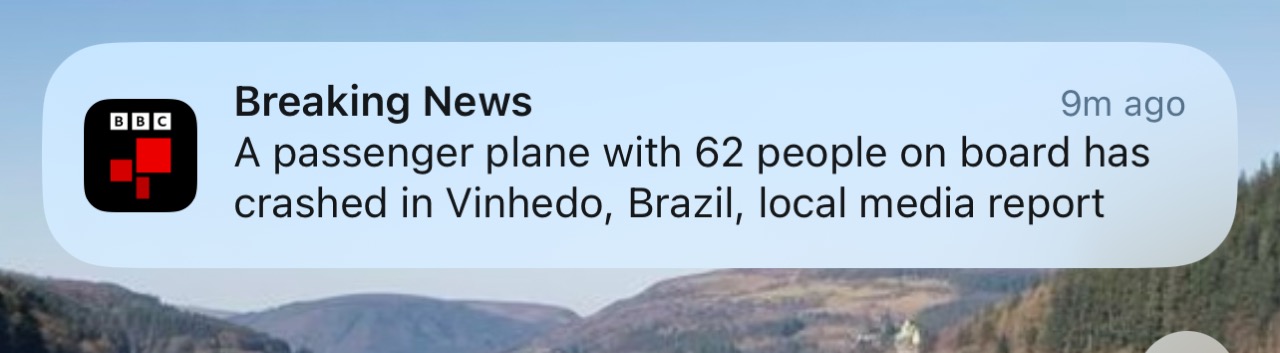

These accidents also make headline news around the world. A tragic accident killing 62 people in Brazil was reported as a flash news alert in the UK, yet every day twice as many people die on Brazilian roads. The design failings on Boeing airplanes, both software issues and door issues, resulted in the aircraft being grounded until the root cause was identified and eliminated.

If we were to apply the same standards to self-driving cars, we would not expect any (fatal) accidents caused by system failure, and any that did occur would be investigated to identify the root cause and make corrections. The regulators are unlikely to approve systems which can fail when they have an opportunity to insist on a zero-tolerance approach to mistakes.

We can also look at Waymo. In 2023 they reported statistics and whilst their cars had been in accidents, they claimed “Every vehicle-to-vehicle event involved one or more road rule violation and/or dangerous behaviors on the part of the human drivers in the other vehicle.” In other words, none were due to a failing by Waymo. In late 2023 they did have an issue after two vehicles crashed into the same towed pickup truck in Phoenix, Arizona. Waymo investigated, fixed the issue and recalled the fleet to apply the update. They have released further data since and the majority of incidents are at very low speed, and whilst some car damage may have resulted, the likelihood of injury is incredibly low. Essentially they are aiming to cause zero accidents.

There is always someone to blame.

We talk about accidents as if nobody is to blame, but that’s not the case, and there are typically consequences as a result.

The causes range from failing to take the weather conditions into account, such as skidding on ice, through to reckless driving. Not all accidents result in the prosecution of the driver, but blame is almost always apportioned.

If we accept the Tesla premise that self-driving cars can cause accidents, so long as they are less frequent than humans, who pays if you're the innocent party? Mercedes have suggested they will take some responsibility in an accident, but their approach is to be accident free. If Tesla took responsibility for car accidents caused by a system mistake, they could be taking on a pretty substantial commitment. Alternatively, we as individuals would have to insure our cars against the car’s failure.

And liability also goes beyond accidents. What about driving offences if the car gets a speed limit wrong, or misses a stop sign? And what if the car parks itself somewhere it shouldn't? Who pays the parking fine?

Conclusion

If your justification for allowing unsupervised self-driving is safety, then systems like the Tesla FSD (supervised) with an attentive driver are going to be safer than an unsupervised system for a considerable time period.

You can also make a strong safety case that technology like Tesla FSD (supervised) is a great safety aid, but only in its supervised mode, and this could possibly also be true in a passive mode. We're already seeing further passive safety changes such as speed-limited cars and cabin cameras monitoring the driver, which in combination could combat some of the common accident causes.

Regulators seek to achieve zero accident rates, something they have done with all other public forms of transport, and one which seems to be the standard adopted by Waymo and Mercedes. It seems unlikely that regulators would allow a threshold of failure when there are no other examples of this outside medicine.

We believe there is no plausible argument for allowing an unsupervised system with a permitted threshold of accidents when the supervised version of the same system will have a much lower accident rate. On that basis the Tesla roadmap is likely to flounder unless there is political intervention for commercial reasons, and any such intervention is unlikely to be universally adopted.